ESRs Description

ESR 1

Development of ultra-thin multi-steerable catheter technology suited for soft-robotic navigation through complex vascular structures

Combining experience on intuitive human-machine interfaces (HMIs) for MIS with know-how on the development of mechanical hand-held steerable catheters for complex interventions in the heart, ESR1 will push the current technology frontiers further by developing a new class of multi-steerable catheters controlled by electro-mechanical soft-robotic actuators. The research will have two main goals:

- to combine soft-robotic actuation with advanced steering technology inspired by clever design principles from nature (octopus, wasp) to develop novel ultrathin multi-steerable catheters for navigation through complex lumens.

- to apply knowledge of human depth perception and eye-hand coordination to optimize HMIs, allowing intuitive control over the multi-steerable catheter designs from 3D control actions and 2D CT/MRI images.

In collaboration with clinical experts, the soft-robotic catheter technology will enable complex vascular interventions (e.g. treatment of chronic total occlusions) or stress-free passage through a narrow ureter, cases which are hard to carry out with existing technology. The catheters will be evaluated ex-vivo in anatomically accurate 3D-printed lumen models.

Main institution and supervisor: TU Delft, P. Breedveld

Secondary institution and supervisor: KU Leuven, E. Vander Poorten

ESR: Fabian Trauzettel

ESR 2

Magnetic driving and actuation

When dealing with soft, miniaturized and elongated instruments for endoluminal and transluminal applications, actuation technologies are limited. On-board actuators are difficult to miniaturize and to integrate, possibly leading to safety issues. Tendon-drive systems suffer from friction and often require very large external actuators. For these reasons, wireless driving solutions based on magnetic dragging, triggering or anchoring are considered extremely promising.

ESR 2 will analyse in which anatomical districts magnetic actuation is feasible, and will seek to determine optimal strategies and combinations of hybrid actuation schemes for each target anatomy. Depending on the anatomy revealed by patient-specific images, the scaling of magnetic forces and torques can be more or less favourable.
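
The scaling argument can be illustrated with a minimal sketch. It assumes a point-dipole model of the external magnet and evaluates the field only on the dipole axis; the function names and any numeric values are illustrative assumptions and do not come from the project description.

```python
import math

MU_0 = 4e-7 * math.pi  # vacuum permeability [T*m/A]


def on_axis_field(m_source, r):
    """Flux density [T] on the axis of a point dipole (moment m_source [A*m^2]) at distance r [m]."""
    return MU_0 * m_source / (2.0 * math.pi * r**3)


def on_axis_gradient(m_source, r):
    """Magnitude of dB/dr [T/m] on the dipole axis."""
    return 3.0 * MU_0 * m_source / (2.0 * math.pi * r**4)


def max_torque(m_tool, m_source, r):
    """Upper bound on the aligning torque [N*m] on a tool magnet: |m x B| is at most m*B."""
    return m_tool * on_axis_field(m_source, r)


def pulling_force(m_tool, m_source, r):
    """Force [N] on a tool magnet aligned with the field: F = m * |dB/dr|."""
    return m_tool * on_axis_gradient(m_source, r)
```

Under this model the available torque falls with the third power of the distance and the pulling force with the fourth power, which is one reason why the depth of the target anatomy matters when judging feasibility.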

In addition, the ESR will explore different magnetic sources, based on permanent magnets and electromagnetic solutions. A taxonomy of magnetic mechanisms for triggering, dragging and anchoring will be prepared. Such a taxonomy can be extremely useful across the different surgical scenarios.

Main institution and supervisor: SSSA, A. Menciassi

Secondary institution and supervisor: KU Leuven, E. Vander Poorten

ESR 3

Distributed proprioception for safe autonomous catheters

The interaction between flexible instrument bodies and surrounding deformable lumens is intrinsically complex and hard to oversee if not explicitly measured. ESR3 identifies and integrates optimal sensing technology for real-time proprioception along the deformable body.

This project includes real-time shape estimation based on optical measurements such as FBGs or relying on electromagnetic (EM) tracking. It explores new sensing methods such as electrical impedance tomography (EIT), as well as algorithms that estimate the contact location and amplitude of the interaction force from the acquired shape estimates. Automatic calibration and registration techniques will be developed, so that the resulting techniques may be employed ‘out of the box’, requiring minimal effort and tuning prior to deployment.
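
As a rough illustration of shape estimation from distributed measurements, the sketch below integrates curvature samples along the instrument into a planar centreline. It assumes that curvature values have already been derived from the sensor readings and that bending stays in one plane; both are simplifying assumptions, and the function name is hypothetical.

```python
import math


def reconstruct_planar_shape(curvatures, ds):
    """Integrate curvature samples [1/m], spaced ds [m] apart, into a list of (x, y) points.

    The base of the instrument sits at the origin and initially points along +x.
    """
    x, y, theta = 0.0, 0.0, 0.0
    points = [(x, y)]
    for kappa in curvatures:
        # Midpoint rule: advance along the heading at the middle of the segment.
        theta_mid = theta + 0.5 * kappa * ds
        x += math.cos(theta_mid) * ds
        y += math.sin(theta_mid) * ds
        theta += kappa * ds
        points.append((x, y))
    return points
```

A real instrument bends in 3D, so a practical method would also track the bending direction and twist; the planar case is shown only because it keeps the integration step easy to follow.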

Applicants should have an MSc/MEng (or equivalent) in Engineering, Computer Science, Mathematics or related disciplines.

Main institution and supervisor: KU Leuven, E. Vander Poorten

Secondary institution and supervisor: SSSA, A. Menciassi

ESR: Xuan Thao Ha

ESR 4

Intraluminal sensing for autonomous navigation in remote district

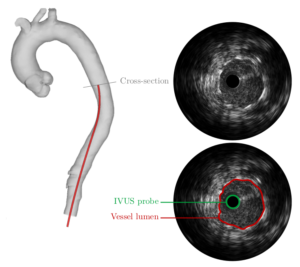

ESR4 analyses and selects the most appropriate intraluminal sensing solutions for the different body targets. More specifically, for vascular applications, on-board sensors such as force sensors on the catheter tip and intravascular ultrasound (IVUS) can be combined with external monitoring solutions (e.g. fluoroscopy). For endoluminal and transluminal applications, new developments with chip-on-tip devices (e.g. NanEye), dedicated CCD and CMOS cameras, or the use of OCT will be considered.

If needed, methods to enhance visibility can be explored (e.g. employing a transparent silicone-like blob ahead of the camera). Accelerometers, gyroscopes and magnetic sensors will be used to determine the position of the internal instruments with respect to an external frame. pH sensors could be used to infer the position of the device from the pH sensed in the target area.

All these sensors are geared to provide maximal information about the surroundings that can be fed into algorithms that aim to reconstruct the surroundings realistically.

Main institution and supervisor: SSSA, A. Menciassi

Secondary institution and supervisor: UNISTRA, P. Renaud

ESR: Sujit Sahu

ESR 5

From local sensing to global lumen reconstruction

This ESR develops techniques to reconstruct vascular lumens from a selection of sensor modalities, including IVUS, EM, shape and forward-looking OCT. Through sensor fusion the methods will be made robust against occlusions, artefacts or disturbances. Machine learning methods will be adopted to extract relevant anatomic features and establish correspondences. Mosaicking methods will be adopted to stitch local features into a global representation. In a second round, the deformation of the lumen (due to physiological processes or interaction with the passing instrument) will be incorporated. This leads to 4D modelling (3D + time). Energy-based methods that minimize the total energy of the combined instrument/vessel structure seem most suitable.

The lumen, represented by its centreline, will be deformed by local models such as a cylinder model fitted through the latest sensor data, the instrument shape or physiological process models. ESR5 further extracts relevant measures directly from the raw data to provide guidance cues, e.g. the distance to an anatomic target to be avoided.
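
One simple guidance cue of this kind can be sketched as follows. The example assumes the centreline is available as a 3D polyline and that the lumen is locally a cylinder of known, constant radius; the function names and the constant-radius assumption are illustrative, not part of the project description.

```python
import math


def point_segment_distance(p, a, b):
    """Euclidean distance from 3D point p to the line segment from a to b."""
    ab = [b[i] - a[i] for i in range(3)]
    ap = [p[i] - a[i] for i in range(3)]
    ab_len2 = sum(c * c for c in ab)
    if ab_len2 == 0.0:
        t = 0.0  # degenerate segment: a and b coincide
    else:
        # Projection of p onto the segment, clamped to its end points.
        t = max(0.0, min(1.0, sum(ap[i] * ab[i] for i in range(3)) / ab_len2))
    closest = [a[i] + t * ab[i] for i in range(3)]
    return math.sqrt(sum((p[i] - closest[i]) ** 2 for i in range(3)))


def distance_to_centreline(p, centreline):
    """Smallest distance from p to any segment of the centreline polyline."""
    return min(point_segment_distance(p, a, b)
               for a, b in zip(centreline, centreline[1:]))


def wall_clearance(p, centreline, lumen_radius):
    """Clearance to the wall under the local cylinder assumption (negative if outside)."""
    return lumen_radius - distance_to_centreline(p, centreline)
```

For instance, `wall_clearance(tip, centreline, 5.0)` would give the remaining margin between a tracked tip position and the wall of a lumen with a 5 mm radius, provided all coordinates are expressed in millimetres in the same frame.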

Main institution and supervisor: KU Leuven, J. Vander Sloten

Secondary institution and supervisor: UNIVR, P. Fiorini

ESR 6

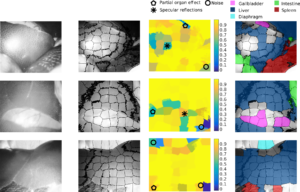

Computer vision and machine learning for tissue segmentation and localization

Each flexible robot will be equipped with extero- and proprioceptive sensors (such as FBG) to provide information on position and orientation, as well as on-board miniaturized cameras. Additionally, real-time (RT) image acquisition will be performed using US sensors placed externally in contact with the patient’s body. To track the position of the flexible robot and simultaneously identify the environmental conditions, RT US image algorithms will be developed. Deep learning approaches combining Convolutional Neural Networks (CNNs) and automatic classification methods (e.g. SVM) will extract characteristic features from the images to automatically detect:

- the flexible robot shape;

- the positions of the hollow lumen edges (to be integrated with ESR5); and

- information on the shape and location of surrounding soft tissues.

RT performance will be achieved by parallel optimization loops.

Main institution and supervisor: POLIMI, E. De Momi

Secondary institution and supervisor: UNISTRA, M. de Mathelin

ESR: Jorge Lazo

ESR 7

Simultaneous tissue identification and mapping for autonomous guidance

One of the challenges of autonomous surgical devices is the ability to navigate towards the clinical target. Two aspects have to be addressed to answer that problem.

First, the targeted disease has to be identified in the organ of interest. Second, because the large majority of luminal organs in the human body have a very complex geometry, real-time information about the position of the device in the lumen is needed. In this project, a side-viewing OCT catheter will be used in conjunction with a robotic endoscope to collect images, which can be used to simultaneously assess the clinical status of the tissue and recover information about the position of the instrument in the lumen.

The position mapping can be further improved by combining optical information with robotic measurements. Additional imaging and sensing modalities like electromagnetic tracking or IVUS may also be used.

Main institution and supervisor: UNISTRA, M. de Mathelin

Secondary institution and supervisor: UNIVR, P. Fiorini

ESR: Guiqiu Liao

ESR 8

Image-based tool tissue interaction estimation

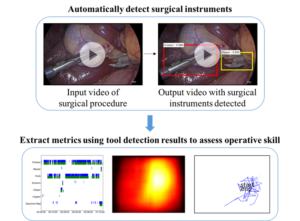

One of the keys to bringing situational awareness to surgical robotics is the automatic recognition of the surgical workflow within the operating room. Indeed, human-machine collaboration requires an understanding of the activities taking place both outside and inside the patient. In this project, we will focus on the modelling and recognition of tool-tissue interactions during surgery.

Based on a database of videos from one type of surgery, we will develop a statistical model to represent the actions performed by the endoscopic tools on the anatomy. We will link this model both to formal procedural knowledge describing the surgery (e.g. an ontology) and to digital signals (such as the endoscopic video) in order to provide information that is human-understandable. Models and measurements coming from the robotic system will also be incorporated, in order to develop semi-supervised or unsupervised methods, thus limiting the need to annotate the endoscopic videos.

Applicants should have an MSc/MEng (or equivalent) in Engineering, Computer Science, Mathematics or related disciplines. Applicants must have strong programming skills and a background in Computer Vision and Machine Learning.

Main institution and supervisor: UNISTRA, N. Padoy

Secondary institution and supervisor: POLIMI, G. Ferrigno

ESR: Luca Sestini

ESR 9

Surgical episode segmentation from multi-modal data

In order to decide which actions to perform, an autonomous robot must be able to reliably recognize the current surgical state or phase it is in. This is especially true in a context of shared autonomy, where part of the procedure is still done by a human operator, or if the robot is to intelligently/semi-automatically assist manual gestures performed by a surgeon. To this end, deep learning methods (e.g. combined CNN for video and RNN for lower-dimensionality data) will be applied to extract the relevant information. The originality of this ESR project is that multi-modal data will be considered: building on previous developments in phase detection in endoscopic video data, the algorithms will be augmented with data coming from intra-operative sensors such as EM trackers and ultrasound images, and with pre-operative data. Feature detectors and descriptors will be developed that discriminate optimally between different areas of interest and reduce data dimensions, thus improving the computational performance for intra-operative applications.

Since the methods developed in this ESR are trained on multi-modal data, they may be adapted and applied both to intraluminal procedures based on video feedback (i.e. colonoscopy and ureteroscopy) and to cardiovascular catheterization.

Applicants should have an MSc/MEng (or equivalent) in Engineering, Computer Science, Mathematics or related disciplines. Applicants must have strong programming skills and a background in Computer Vision and Machine Learning.

Main institution and supervisor: UNIVR, D. Dall’Alba

Secondary institution and supervisor: UNISTRA, N. Padoy

ESR: Sanat Ramesh

ESR 10

Automatic handsfree visualization of a 6 DoF agent within a complex anatomical space

Where ATLAS develops technology to steer flexible instruments through lumens, ESR10 develops an intuitive graphical user interface (GUI) to supervise this execution.

Computer tools for 3D visualization are very powerful, allowing visualization from an arbitrary viewpoint. However, managing and understanding this viewpoint is tedious and tiresome, and is aggravated when it concerns a moving/deformable scene. To avoid users needing to continuously manipulate the viewpoint, this ESR will develop technology for:

- hands-free automatic visualization of the scene, in which the viewpoint is updated following the same rules an expert would apply;

- automatically setting local transparencies to reduce viewpoint changes, e.g. those that would otherwise be made to perceive relative positions, understand contact locations or avoid occlusions. Easy to follow when initiated by oneself, such changes are difficult to follow if initiated by others. The system must thus adequately guess the operator’s visualisation needs;

- adjusting the view and perspective depending on the tracked path and mapping (SLAM). This calls for an intelligent system that deforms the run path automatically, so that it is always visible. In the case of a bowel exploration, the system would present an unravelled path while respecting the textures and features of the walls, thus always achieving proper visualization regardless of the complexity of the trajectory and its occlusions.

This ESR will work closely with ESR9 as surgical episodes form key inputs for an intelligent visualisation system.

Main institution and supervisor: UPC, A. Casals

Secondary institution and supervisor: SSSA, A. Menciassi

ESR: Martina Finocchiaro

ESR 11

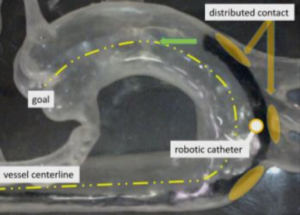

Control of multi-DOF catheters in an unknown environment

The interaction between a flexible catheter and a deformable lumen is extremely complex, as it is affected by slack, friction and a time-varying contact state. ESR11 focuses first on devising controllers for the distal robot part. The problem of deriving a controller that behaves robustly regardless of contact state and actuator limitations (on power and range) is still unsolved.

This will require adjusting the kinematic model to the governing contact points/forces, which can be estimated by fusing proprioception (ESR3) and RT reconstruction (ESR5, ESR6). Second, to establish a desirable global shape, a multi-objective control problem is proposed in order to trade off:

- the amplitude and direction of forces applied on tissue;

- the need to avoid specific areas (e.g. arterial calcification);

- the desire to include suggestions by users e.g. provided via teleoperation or shared control.
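
One common way to express such a trade-off is a weighted sum of the individual objectives. The sketch below is only an illustration under that assumption: the dictionary keys, the weights and the specific penalty terms are hypothetical and are not taken from the project description.

```python
def combined_cost(candidate, weights, user_command):
    """Weighted sum of three penalty terms for one candidate control action.

    candidate holds hypothetical predictions for that action:
      tissue_force           - predicted contact force magnitude
      distance_to_avoid_zone - predicted distance to an area to be avoided
      tip_velocity           - resulting tip velocity (tuple)
    """
    force_term = candidate["tissue_force"] ** 2
    # Penalty grows as the instrument approaches the area to avoid.
    avoid_term = 1.0 / max(candidate["distance_to_avoid_zone"], 1e-6)
    # Squared deviation from what the user asked for.
    user_term = sum((c - u) ** 2
                    for c, u in zip(candidate["tip_velocity"], user_command))
    return (weights["force"] * force_term
            + weights["avoid"] * avoid_term
            + weights["user"] * user_term)


def select_action(candidates, weights, user_command):
    """Pick the candidate with the lowest combined cost."""
    return min(candidates, key=lambda c: combined_cost(c, weights, user_command))
```

Changing the weights shifts the balance: a large `force` weight favours gentle contact even when that means departing from the user's command, whereas a large `user` weight makes the controller behave more like direct teleoperation.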

Main institution and supervisor: KU Leuven, E. Vander Poorten

Secondary institution and supervisor: TU Delft, J. Dankelman

ESR: Di Wu

ESR 12

Distributed follow-the-leader control for minimizing tissue forces during soft-robotic endoscopic locomotion through fragile tubular environments

Using prior experience on developing snake-like instruments for skull base surgery, ESR12 will elaborate these mechanical concepts into advanced soft-robotic endoscopes able to propel themselves forward through fragile tubular anatomic environments to hard-to-reach locations in the body. The ESR has two main goals:

- to use advanced 3D-printing to create novel snake-like endoscopic frame-structures that can be printed in a single step without need for assembly, and that easily integrate cameras, actuators, biopsy channels and glass fibres. FEM simulations will be used to optimize a) shapes that are easy to bend yet hard to twist and compress (e.g. by using helical shapes), and b) minimal distributions of actuators enabling complex and precisely controlled motion.

- to develop follow-the-leader locomotion schemes for moving through fragile tubular environments (e.g. colon or ureter) and evaluate this ex-vivo in anatomic tissue phantoms.
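
The follow-the-leader idea can be sketched in its simplest discrete form: each time the instrument advances by one segment length, the tip takes a new steering angle and every other segment inherits the angle previously held by the segment ahead of it, so the body retraces the path of the tip. The class below is a hypothetical illustration that assumes equal-length segments and planar bending.

```python
from collections import deque


class FollowTheLeader:
    """Shift-register model of follow-the-leader motion for a segmented instrument."""

    def __init__(self, n_segments):
        # Index 0 is the tip (the leader); the instrument starts out straight.
        self.angles = deque([0.0] * n_segments, maxlen=n_segments)

    def advance(self, tip_angle):
        """Advance by one segment length with a new steering angle at the tip.

        Each follower inherits the angle of the segment ahead of it; the
        angle of the rearmost segment is discarded by the bounded deque.
        """
        self.angles.appendleft(tip_angle)
        return list(self.angles)
```

In a physical device the advance is continuous and the segments cannot change angle instantaneously, so a practical scheme would interpolate between these discrete states.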

Main institution and supervisor: TU Delft, J. Dankelman

Secondary institution and supervisor: POLIMI, G. Ferrigno

ESR: Chun-Feng Lai

ESR 13

Path planning and real-time re-planning

Given the deformable nature of the surroundings, RT planning and control are needed to guarantee that a flexible robot reaches a target site with a certain desired pose. ESR 13 will implement an accurate kinematic and dynamic model of the flexible robot, incorporating knowledge of the robot limitations directly in the planning algorithm so that the best paths are executed:

- pre-operatively, considering the constraints on allowable paths, the location of the anatomic target and

- intra-operatively including the uncertainties in the adopted (and identified) flexible robot model and of the collected sensor readings (ESR5).

Advanced exploration approaches will be adapted to each specific clinical scenario, its constraints and the robot constraints such as its manipulability. Specific clinically relevant optimality criteria will be identified and integrated. These methods could, for example, try to keep sharp parts of the instrument (e.g. the tip) away from lumen edges. RT capabilities will make it possible to re-plan the path during the actual operation.
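
A toy version of planning with a clearance criterion and re-planning is sketched below. It swaps the flexible-robot model for a plain 2D occupancy grid and uses Dijkstra's algorithm, so it only illustrates the idea of penalising proximity to lumen edges; the grid representation, the function name and the penalty are assumptions made for the example.

```python
import heapq


def plan_path(grid, start, goal, clearance_weight=1.0):
    """Dijkstra search on a 4-connected grid where grid[r][c] == 1 marks lumen wall.

    Cells next to a wall (or to the grid border) cost extra, which pushes the
    path towards the middle of the lumen. Returns a list of cells or None.
    """
    rows, cols = len(grid), len(grid[0])
    steps = [(1, 0), (-1, 0), (0, 1), (0, -1)]

    def free(r, c):
        return r in range(rows) and c in range(cols) and grid[r][c] == 0

    def wall_penalty(r, c):
        # Number of blocked or out-of-bounds neighbours of the cell.
        return sum(1 for dr, dc in steps if not free(r + dr, c + dc))

    best = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1]
        if cost > best.get(cell, float("inf")):
            continue  # stale queue entry
        for dr, dc in steps:
            nxt = (cell[0] + dr, cell[1] + dc)
            if not free(*nxt):
                continue
            new_cost = cost + 1.0 + clearance_weight * wall_penalty(*nxt)
            if best.get(nxt, float("inf")) > new_cost:
                best[nxt] = new_cost
                parent[nxt] = cell
                heapq.heappush(queue, (new_cost, nxt))
    return None
```

Re-planning in this sketch simply means updating `grid` when new sensor data arrives and calling `plan_path` again from the current cell. A method suited to intra-operative use would more likely reuse the previous search and account for model and sensor uncertainty.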

Main institution and supervisor: POLIMI, E. De Momi

Secondary institution and supervisor: TU Delft, J. Dankelman

ESR: Zhen Li

ESR 14

Automatic endoscope repositioning with respect to the surgical task

Surgery often involves performing delicate operations with two hands or instruments. Those operations are typically even more difficult when considering MIS done in an intraluminal setting. This is due to the limited controllability of the flexible instruments and to the restricted access to the surgical site. Moreover, humans experience problems in executing complex tasks with flexible multi-arm endoscopes because of the large number of DOFs and the coupling between DOFs. Starting a surgical gesture in an unfavourable position may necessitate an interruption for repositioning, which is not always acceptable clinically.

ESR14 will develop a set of algorithms for intelligent repositioning of the endoscope and its arms in a favourable position. First, the intended gesture is detected using a task model combined with intraluminal sensing. Then, planning and control algorithms are developed to position the system such that the gesture can be optimally performed. ESR14 aims to develop semi-automatic robotic repositioning in which robot and operator collaborate; this is a large step towards full autonomy. The main targets will be colonoscopy and gastroscopy, where robots are usually more complex, but the methods developed will be generic and therefore applicable to other scenarios.

Main institution and supervisor: UNISTRA, M. de Mathelin

Secondary institution and supervisor: KU Leuven, J. de Schutter

ESR: Fernando Herrera

ESR 15

Optimal learning method for autonomous control and navigation

Learning optimal control strategies for autonomous navigation and on-line decision making is a challenging problem. Currently, two main strategies could be adopted: learning from data acquired during the execution of surgical procedures by expert surgeons, or learning by experimentation.

ESR15 will compare motion control strategies for intraluminal navigation learned from expert data with those learned in a simulated environment. From these results, it will be possible to find the optimal control strategies for different clinical scenarios, taking into account the specific robotic configuration. The trajectory planning methods developed in ESR9 will be used to define an initial trajectory for the autonomous navigation. The performance of the different approaches will be evaluated in a realistic setting (physical phantoms) and in a simulated environment using a set of objective evaluation metrics.

An integrated testing environment, including advanced visualization, will be developed to improve the evaluation and testing of different methods (extending/integrating the results of ESR10). The proposed navigation strategies will be tested in the colonoscopy and ureteroscopy clinical scenarios, but possible extensions to cardiovascular catheterization will be considered.

Applicants should have an MSc/MEng (or equivalent) in Engineering, Computer Science, Mathematics or related disciplines. Applicants must have strong programming skills and a background in Computer Vision and Machine Learning.

Main institution and supervisor: UNIVR, P. Fiorini

Secondary institution and supervisor: UPC, A. Casals

ESR: Ameya Pore